math

yeah the math stuff

Calculus

tags math calculus

Differential

example

intergrant

example

Taylor series 泰勒展開式

find sin(x) Taylor series

Formal Language

tags: math

automata

- DFA(Deterministic Finite Automation)

- NFA(Nondeterministic Finite Automation)

- PDA(Push Down Automation)

DFA / NFA

DFA

regular language

regular expression

closure properties

pumping lemma for regular languages

DFA == regular language

For every NFA there is a regular expression such that

L(q,p,k){w ∈ | there is a run of M on w from state q to state p and let passing through states set length < k}.

DFA to regular language

state removal method

brzozowski algebraic method

PDA

context free language

pumping lemma for CFL

closure properties

Formal Language

tags: math

automata

- DFA(Deterministic Finite Automation)

- NFA(Nondeterministic Finite Automation)

- PDA(Push Down Automation)

DFA / NFA

DFA

regular language

regular expression

closure properties

pumping lemma for regular languages

DFA == regular language

For every NFA there is a regular expression such that

L(q,p,k){w ∈ | there is a run of M on w from state q to state p and let passing through states set length < k}.

DFA to regular language

state removal method

brzozowski algebraic method

PDA

context free language

pumping lemma for CFL

closure properties

hw1

1.a

1.b

1.c

1.d

1.e

2.a

2.b

3.a

3.b

3.c

3.d

3.e

4.a

4.b

4.c

5

If is regular, then is also regular because is but remove the final state that be passing though another final state.

for example

6.a

6.b

6.c

6.d

6.e

6.f

6.g

If is regular language then it can convert to DFA.

Divide a DFA to equeal part still are DFA which make it still regular.

6.h

6.i

6.j

not regular

7.a

7.b

7.c

7.d

8.a

8.b

8.c

8.d

is DFA by definition

is base on but modify function and remove some state and final state. So it still a DFA

8.e

9.a

Each symbols need 2 state to record is even or odd state and additional one state for init state; total 2n+1 state.

9.b

Because DFA each state have determine result to next state by input. We need to know all symbols is even or odd in one state. So each symbols have two possible (even or odd) lead to state

9.c

10.a

prove are morphism

F-map

B-map

10.b

10.c

tags math linear algebra

linear algebra

matrix

| Column 1 | Column 2 | Column 3 | |

|---|---|---|---|

| Row1 | value | value | value |

| Row2 | value | value | value |

| Row3 | value | value | value |

diagonal 對角 commutative law associative law distributive law

sample

乘積

反矩陣

identity matrix

transpose matrix

inner change (change row)

math to matrix

reduced echelon form(gaussian elimination)

vector equation and matrix equation

span

linear dependence

linear depandence vs. span:

echelon form

vector equation

span R^4

NO,did not have pivot point in row3 and row4

linear independence?

NO,did not have pivot point in column 3

transform

one-to-one

onto

linear transformation

vectorspace

child space

null space

columns space

rows space

rank nullity

rank

the number of non-zero pivot column

nullity/kernel

the number of zero pivot column

Eigenvector and Eigenvalue

eigen value

eigen vector

diagonalization

orthogonal

dot

|A|

vector

matrix

orthogonal vs orthonormal

orthogonal matrix

orthonormal

find orth basis(gram-schmidt process)

derivative of a Matrix

projection

curve fitting | regression | normal equation

way 1

way 2

way 3

math-modeling

title: 循環賽

tags: Templates, Talk description: View the slide with “Slide Mode”.

循環賽

題目

下圖為五位選手的比賽結果 a->b表示 a 獲勝

矩陣

循環

循環

tags math matrix algebra

matrix algebra

multiplication

dot product

by columns

by row

by block

matrix form of gaussian elimination

actually the row reduce from the there are matrix multiplication as well

Subtracting 2 times the row of A from the row of A

for example

revert U to A

are elimination operation matrix

is Lower triangular matrix

is Upper triangular matrix

Symmetric matrix

projection matrix(project vector to columns space)

projection vector on vector

projection matrix

property

- symmetric

Pseudoinverse matrix

determinant

SVD

polar decomposition

tags math matrix algebra

matrix algebra

multiplication

dot product

by columns

by row

by block

matrix form of gaussian elimination

actually the row reduce from the there are matrix multiplication as well

Subtracting 2 times the row of A from the row of A

for example

revert U to A

are elimination operation matrix

is Lower triangular matrix

is Upper triangular matrix

Symmetric matrix

projection matrix(project vector to columns space)

projection vector on vector

projection matrix

property

- symmetric

Pseudoinverse matrix

determinant

SVD

polar decomposition

Q2

1

2

4

5

Q3

1

2

3

4

5

6

7

8

9

10

11

- (a) real eigenvalue

- (b) real part < 0

- (c) eigenvalue 1 or -1

- (d) eigenvalue =1

- (e)

- (f) eigenvalue =0

12

13

14

15

16

a

b

c

18

hw2 110590049

tags probability

problem 1

problem 2

problem 3

problem 4

problem 5

Evey level at least have one people leave so only 3 people can chose leave level freely

problem 6

(a)

(b)

(c)

problem 7

problem 8

hw2 110590049

tags probability

problem 1

problem 2

problem 3

problem 4

problem 5

Evey level at least have one people leave so only 3 people can chose leave level freely

problem 6

(a)

(b)

(c)

problem 7

problem 8

hw3 110590049

tags probability

1.a

1.b

2

3

4

5

6

7.a

7.b

8

9

10

11

hw4 110590049

tags probability

1

2

3

4

5

6

7

hw5 110590049

tags probability

1

2

3

4

5

6

7

8

9

hw6 110590049

tags probability

1

2

3

4

5

6

7

8

9

10

hw7 110590049

tags probability

1.a

1.b

2.a

2.b

3

4

5

6

hw8 110590049

tags probability

1

2

3

4

5

6

7

8

9

hw9 110590049

tags probability

1.a

1.b

1.c

1.d

2

3.a

3.b

3.c

3.d

3.e

4

5

6

7.a

7.b

7.c

SIGNALS and SYSTEMS

CT vs DT

CT(continuous-time)

For a continuous-time (CT) signal, the independent variable is

always enclosed by a parenthesis

Example:

DT(discrete-time)

For a discrete-time (DT) signal, the independent variable is always

enclosed by a brackets

Example:

Signal Energy and Power

Energy

average power

ex1

ex2

odd vs even

even

odd

some prove

unit step function and unit impulse function

| unit step | unit impules | |

|---|---|---|

| discrcte | ||

| continouse | ||

basic system properties

memory and memoryless

memoryless systems

only the current signal

memory systems

only the current signal

invertibility

function is invertable

causality

only the current and past signal are relate then it is causal system

causal systems

non-causal systems

stability (BIBO stable )

can find BIBO(bounded-input and bounded-output) in another word the function is diverage or not.

BIBO stable

BIBO unstable

time invariance

the function shift input will only shift and dont have any effect

example

linearity

if then is linearty

test

| memoryless | stable | causal | linaer | time invariant | |

|---|---|---|---|---|---|

| ✅ | ✅ | ✅ | ❌ | ✅ | |

| ✅ | ✅ | ✅ | ✅ | ❌ | |

| ❌ | ✅ | ❌ | ✅ | ❌ | |

| ❌ | ✅ | ❌ | ✅ | ✅ | |

| ❌ | ✅ | ❌ | ✅ | ❌ | |

| ✅ | ✅ | ✅ | ✅ | ✅ |

complex plane

exponential signal & sinusoidal signal

| C is real | C is complex | |

|---|---|---|

| a is real | ||

| a is imaginary | ||

| a is complex |

periods

CT

example

DT

fundamental period is integer that for all integer

have to be integer.

not every “sinusoidal signal” have

example

convolution

CT

DT

| h\x | x[n] | 0 | 1 | 2 | 3 | 3 |

|---|---|---|---|---|---|---|

| h[n] | 1 | 0.5 | 0.25 | 0.125 | 0.0625 | |

| 0 | 1 | 1 | 0.5 | 0.25 | 0.125 | 0.0625 |

| 1 | 0.5 | 0.5 | 0.25 | 0.125 | 0.0625 | 0.03125 |

| 2 | 0.25 | 0.5 | 0.125 | 0.0625 | 0.03125 | 0.015625 |

| 3 | 0 | 0 | 0 | 0 | 0 | 0 |

| 4 | 0 | 0 | 0 | 0 | 0 | 0 |

commutative

distributive

associative

LTI(Linear Time-Invariant)

Linear

Time-invariant

LTI systems and convolution

stability for LTI Systems

Unit Step Response of an LTI System

CT

DT

eigen function and eigen value of LTI systems

The response of an LTI system to a eigen function is the same eigen function with only a change in amplitude(eigen value)

The Response of LTI Systems to Complex Exponential Signals

example

system

delay system

difference system

accumulation system

example

SIGNALS and SYSTEMS

CT vs DT

CT(continuous-time)

For a continuous-time (CT) signal, the independent variable is

always enclosed by a parenthesis

Example:

DT(discrete-time)

For a discrete-time (DT) signal, the independent variable is always

enclosed by a brackets

Example:

Signal Energy and Power

Energy

average power

ex1

ex2

odd vs even

even

odd

some prove

unit step function and unit impulse function

| unit step | unit impules | |

|---|---|---|

| discrcte | ||

| continouse | ||

basic system properties

memory and memoryless

memoryless systems

only the current signal

memory systems

only the current signal

invertibility

function is invertable

causality

only the current and past signal are relate then it is causal system

causal systems

non-causal systems

stability (BIBO stable )

can find BIBO(bounded-input and bounded-output) in another word the function is diverage or not.

BIBO stable

BIBO unstable

time invariance

the function shift input will only shift and dont have any effect

example

linearity

if then is linearty

test

| memoryless | stable | causal | linaer | time invariant | |

|---|---|---|---|---|---|

| ✅ | ✅ | ✅ | ❌ | ✅ | |

| ✅ | ✅ | ✅ | ✅ | ❌ | |

| ❌ | ✅ | ❌ | ✅ | ❌ | |

| ❌ | ✅ | ❌ | ✅ | ✅ | |

| ❌ | ✅ | ❌ | ✅ | ❌ | |

| ✅ | ✅ | ✅ | ✅ | ✅ |

complex plane

exponential signal & sinusoidal signal

| C is real | C is complex | |

|---|---|---|

| a is real | ||

| a is imaginary | ||

| a is complex |

periods

CT

example

DT

fundamental period is integer that for all integer

have to be integer.

not every “sinusoidal signal” have

example

convolution

CT

DT

| h\x | x[n] | 0 | 1 | 2 | 3 | 3 |

|---|---|---|---|---|---|---|

| h[n] | 1 | 0.5 | 0.25 | 0.125 | 0.0625 | |

| 0 | 1 | 1 | 0.5 | 0.25 | 0.125 | 0.0625 |

| 1 | 0.5 | 0.5 | 0.25 | 0.125 | 0.0625 | 0.03125 |

| 2 | 0.25 | 0.5 | 0.125 | 0.0625 | 0.03125 | 0.015625 |

| 3 | 0 | 0 | 0 | 0 | 0 | 0 |

| 4 | 0 | 0 | 0 | 0 | 0 | 0 |

commutative

distributive

associative

LTI(Linear Time-Invariant)

Linear

Time-invariant

LTI systems and convolution

stability for LTI Systems

Unit Step Response of an LTI System

CT

DT

eigen function and eigen value of LTI systems

The response of an LTI system to a eigen function is the same eigen function with only a change in amplitude(eigen value)

The Response of LTI Systems to Complex Exponential Signals

example

system

delay system

difference system

accumulation system

example

signals and systems mid

2

odd signal of is

3

a

odd signal

b

odd signal

even signal

product of and is still are odd signal since

c

4

| linear | time invariant | |

|---|---|---|

| no | no | |

| yes | yes | |

| yes | no |

5

| period | |

|---|---|

6

| memoryless | time invariant | linear | causal | stable | |

|---|---|---|---|---|---|

| ❌ | ✅ | ✅ | ✅ | ✅ | |

| ❌ | ✅ | ✅ | ✅ | ✅ | |

| ✅ | ✅ | ❌ | ✅ | ✅ | |

| ❌ | ✅ | ❌ | ✅ | ✅ |

signals and systems final

1

2

3

3.a

3.b

yes

3.c

yes

4

4.a

computer science

compiler

lexical analysis

follow

first

nullity

regex to DFA

parser

arithmetic expressions

example

look like this

Derivation

context free grammar

The language defined by a context-free grammar

ambiguous grammar

give int + int * int

left derivation

right derivation

Non-ambiguous grammar

give int + int * int * int

bottom-up parsing

- scan the input from left to right

- look for right-hand sides of production rules to build the derivation tree from bottom to top

LR parsing (Knuth, 1965)

Using an automaton and considering the first k tokens of the input;

this is called LR(k) analysis (LR means “Left to right scanning, Rightmost derivation”)

LR table

example

Construction of the automation and the table

NULL

Let .

holds if and only if we can derive from , i.e.,

FIRST

Let .

is the set of all terminals starting words derived from , i.e.,

FOLLOW

Let .

is the set of all terminals that may appear after in a derivation, i.e.,

compiler

lexical analysis

follow

first

nullity

regex to DFA

parser

arithmetic expressions

example

look like this

Derivation

context free grammar

The language defined by a context-free grammar

ambiguous grammar

give int + int * int

left derivation

right derivation

Non-ambiguous grammar

give int + int * int * int

bottom-up parsing

- scan the input from left to right

- look for right-hand sides of production rules to build the derivation tree from bottom to top

LR parsing (Knuth, 1965)

Using an automaton and considering the first k tokens of the input;

this is called LR(k) analysis (LR means “Left to right scanning, Rightmost derivation”)

LR table

example

Construction of the automation and the table

NULL

Let .

holds if and only if we can derive from , i.e.,

FIRST

Let .

is the set of all terminals starting words derived from , i.e.,

FOLLOW

Let .

is the set of all terminals that may appear after in a derivation, i.e.,

hw0

(*print_endline "Hello, World!"*)

let width=32;;

let height=16;;

(* Exercise 1 *)

(* Exercise 1.a*)

let rec fact n=

if n<=1 then

1

else

n*fact(n-1);;

let p_w= "1.a fact(5)="^ string_of_int (fact(5) );;

print_endline p_w;;

(* Exercise 1.b*)

let rec nb_bit_pos n=

if n=0 then

0

else if (n land 1 = 1) then

1 + nb_bit_pos( n lsr 1)

else

nb_bit_pos( n lsr 1);;

let p_w= "1.b nb_bit_pos(5)=" ^ string_of_int (nb_bit_pos(5) );;

print_endline p_w;;

(* Exercise 2*)

let fibo n=

let rec aux n a b=

if n=0 then

a

else

aux (n-1) b (a+b)

in

aux n 0 1

let p_w= "2. fibo(6)="^ string_of_int (fibo(6));;

print_endline p_w;;

(* Exercise 3 *)

(* Exercise 3.a *)

let palindrome m =

let len=String.length m in

let vaild= ref true in

for i = 0 to (len lsr 1) do

if String.get m i <> String.get m (len-1-i) then

vaild:=false

done;

!vaild

let p_w= "3.a palindrome('aba')="^ string_of_bool (palindrome("aba")) ;;

print_endline p_w;;

(* Exercise 3.b*)

let compare m1 m2 =

let len1=String.length m1 in

let len2=String.length m2 in

let rec check i=

if (i < len1) && (i < len2 )then

let c1 =String.get m1 i in

let c2 =String.get m2 i in

if c1 = c2 then

check(i+1)

else if c1 < c2 then

true

else

false

else if len1 == len2 && i == len1 then

false

else if i < len1 then

false

else

true

in

check 0

let p_w= "3.b compare('ab','abc')="^ string_of_bool (compare "ab" "abc") ;;

print_endline p_w;;

(* Exercise 3.c *)

let factor m1 m2 =

let len1=String.length m1 in

let len2=String.length m2 in

let vaild =ref false in

for i = 0 to len2-len1 do

let sub=String.sub m2 i len1 in

if sub = m1 then

vaild:=true

done;

!vaild

let p_w= "3.c factor('ab','abc')="^ string_of_bool (factor "ac" "abcdfg") ;;

print_endline p_w;;

(* Exercise 4 *)

let string_of_list l = "[" ^ (String.concat "," (List.map string_of_int l)) ^ "]"

let slice l start stop =

let rec aux i acc = function

| [] -> List.rev acc (* If list is empty, return the reversed accumulator *)

| hd :: tl ->

if i >= stop then List.rev acc (* Stop when index reaches 'stop' *)

else if i >= start then aux (i + 1) (hd :: acc) tl (* Add element to accumulator if within slice *)

else aux (i + 1) acc tl (* Continue without adding element *)

in

aux 0 [] l

let split l =

let len = (List.length l) in

let mid = len lsr 1

in

(slice l 0 mid, slice l mid len)

let merge l1 l2 =

let rec aux acc = function

| (h1::t1,h2::t2) ->

if h1 < h2 then

aux (h1::acc) (t1,h2::t2)

else

aux (h2::acc) (h1::t1,t2)

| (h1::t1,[]) -> aux (h1::acc) (t1,[])

| ([],h2::t2) -> aux (h2::acc) ([],t2)

| ([],[]) -> List.rev acc

in

aux [] (l1,l2)

let rec sort ll=

if List.length ll <=1 then

ll

else

let l,r =split ll in

let sl,sr=sort(l),sort(r) in

merge sl sr

let () =

let test_list = [1; 4; 3; 2; 5] in

let l,r =split test_list in

let p_w1= "4. split" ^ string_of_list (test_list) ^"=" ^ string_of_list (l) ^ ","^ string_of_list (r) in

let p_w2= "4. merge" ^ string_of_list (l) ^ ","^ string_of_list (r) ^ "=" ^string_of_list ( merge l r)in

let p_w3= "4. sort" ^ string_of_list (test_list) ^"=" ^ string_of_list (sort test_list) in

print_endline p_w1;

print_endline p_w2;

print_endline p_w3

(* Exercise 5.a *)

let pow m = m * m

let square_sum m =

List.fold_left (+) 0 (List.map pow m)

let () =

let test_list = [1; 2; 3] in

let p_w = "5.a square_sum = " ^ string_of_int (square_sum test_list) in

print_endline p_w

(* Exercise 5.b *)

let find_opt x l =

let rec aux i = function

| [] -> None

| hd :: tl ->

if hd = x then

Some i

else

aux (i + 1) tl (* Check if head equals x, otherwise recurse *)

in

aux 0 l

let find_opt x l=

let (return ,_) =List.fold_left (

fun (out,i) v ->

if v=x && out ==None then

(Some i,i+1)

else

(None,i+1)

)(None,0) l in

return

let unwrap = function

| Some c -> string_of_int c

| None -> "Not found"

let () =

let test_list = [1; 2; 3; 2] in

let result = find_opt 2 test_list in

let p_w = "5.b find_opt 2," ^ string_of_list test_list ^ " = " ^ unwrap result in

print_endline p_w

(* Exercise 6 *)

let rev l =

let rec rev_append acc l =

match l with

| [] -> acc

| h :: t -> rev_append (h :: acc) t

in

rev_append [] l

let map f l =

let rec aux acc l =

match l with

| [] -> List.rev acc (* Reverse the accumulator before returning *)

| h :: t -> aux (f h :: acc) t

in

aux [] l

let () =

(*

let test_list = List.init 1000001 (fun i -> i) in

let r1= rev test_list in

let r2= map (fun x -> x*2)test_list in

*)

let p_w1 = "6. rev ..." in

let p_w2 = "6. map ..." in

print_endline p_w1;

print_endline p_w2

(* Exercise 7 *)

type 'a seq =

| Elt of 'a

| Seq of 'a seq * 'a seq

let (@@) x y = Seq(x, y)

let rec hd x =

match x with

| Elt s -> s

| Seq(s1,s2) -> hd s1

let rec tl l=

let rec aux find ll =

match ll with

| Elt s -> raise (Failure "you entered an empty list")

| Seq (Elt s, Elt s1) ->

if find then

(false,Elt s1)

else

(false, Seq (Elt s, Elt s1))

| Seq (Elt s, s1) ->

if find then

(false,s1)

else

(false, Seq (Elt s, s1))

| Seq (s1, Elt s) ->

let find',ll=aux find s1 in

if find'=false then

(false,Seq (ll, Elt s) )

else

(false,Seq (s1, Elt s) )

| Seq (x1, x2 )->

let find',ll=aux find x1 in

if find'=false then

(false,Seq (ll,x2) )

else

(false,Seq (x1, x2 ))

in

let _,ll=aux true l in

ll

let rec mem v =function

| Elt s -> s=v

| Seq(s1,s2) -> ((mem v s1) || (mem v s2))

let rec rev =function

| Elt s -> Elt s

| Seq(s1,s2) -> Seq((rev s2),(rev s1))

let rec map f = function

| Elt s -> Elt (f s) (* Apply f to the single element *)

| Seq (s1, s2) -> Seq (map f s1, map f s2) (* Recursively map over both sub-sequences *)

let rec fold_left f acc = function

| Elt s -> f acc s (* Apply f to the accumulator and the single element *)

| Seq (s1, s2) ->

let acc' = fold_left f acc s1 in (* Fold over the first sub-sequence *)

fold_left f acc' s2 (* Fold over the second sub-sequence *)

let rec fold_right f seq acc =

match seq with

| Elt s -> f s acc (* Apply f to the single element and the accumulator *)

| Seq (s1, s2) -> fold_right f s1 (fold_right f s2 acc) (* Fold over both sub-sequences *)

let seq2list seq =

let rec aux acc = function

| Elt s -> s :: acc

| Seq (s1, s2) -> aux (aux acc s1) s2

in

List.rev (aux [] seq)

let find_opt x l=

let (return ,_) =fold_left (

fun (out,i) v ->

match out with

| None ->

if v=x then

(Some i,i+1)

else

(None,i+1)

| Some k ->

(out,i+1)

)(None,0) l in

return

let nth x l=

let (return ,_) =fold_left (

fun (out,i) v ->

match out with

| None ->

if i=x then

(Some v,i+1)

else

(None,i+1)

| Some k ->

(out,i+1)

)(None,0) l in

match return with

| None -> raise (Failure "out of range")

| Some v -> v

(*

let print_of_something = function

| Int s -> string_of_int s

| Elt s -> string_of_list [s]

| Seq (s1,s2)-> string_of_list (seq2list s1) ^ string_of_list (seq2list s2)

*)

let () =

let test_seq =

Seq(Seq (Elt 1,Elt 2),Seq (Seq (Elt 3,Elt 4),Elt 5)) in

let test_list= seq2list test_seq in

let p_w1 = "7. hd " ^ string_of_list test_list ^"=" ^ string_of_int (hd test_seq) in

let p_w2 = "7. tl " ^ string_of_list test_list ^"=" ^ string_of_list (seq2list (tl test_seq)) in

let p_w3 = "7. mem" ^ string_of_list test_list ^"=" ^ string_of_bool(mem 5 test_seq) in

let p_w4 = "7. rev" ^ string_of_list test_list ^"=" ^ string_of_list (seq2list ( rev test_seq)) in

let p_w5 = "7. map" ^ string_of_list test_list ^"=" ^ string_of_list (seq2list ( map (fun x -> x *2 )test_seq)) in

let p_w6 = "7. fold_right" ^ string_of_list test_list ^"=" ^ string_of_int( fold_left (+) 0 test_seq ) in

let p_w7 = "7. find_opt " ^ string_of_int 3 ^ string_of_list test_list ^"=" ^ (unwrap( find_opt 3 test_seq ) )in

let p_w8 = "7. nth " ^ string_of_int 3 ^ string_of_list test_list ^"=" ^ (string_of_int( nth 3 test_seq ) )in

print_endline p_w1;

print_endline p_w2;

print_endline p_w3;

print_endline p_w4;

print_endline p_w5;

print_endline p_w6;

print_endline p_w7;

print_endline p_w8

hw1

1

gcc -S exercise1.c -o exercise1.s

sh run.sh exercise1.s

2~5

sh run.sh exercise2.s

sh run.sh exercise3.s

sh run.sh exercise4.s

sh run.sh exercise5.s

sh run.sh exercise6.s

6

gcc -S exercise6.c -o exercise6.s

sh run.sh exercise6.s

7

gcc -S matrix.c -o matrix.s

sh run.sh matrix.s

run.sh

#!/bin/bash

# Check if file is provided

if [ -z "$1" ]; then

echo "Usage: $0 <assembly-file>"

exit 1

fi

# Set the input file

asm_file=$1

# Get the base filename without the extension

base_filename=$(basename "$asm_file" .s)

# Assemble

# nasm -f elf64 "$asm_file" -o "$base_filename.o"

as "$asm_file" -o "$base_filename.o"

if [ $? -ne 0 ]; then

echo "Error: Assembly failed"

exit 1

fi

# Link

gcc -no-pie "$base_filename.o" -o "$base_filename.bin"

if [ $? -ne 0 ]; then

echo "Error: Linking failed"

exit 1

fi

# Execute

./"$base_filename.bin"

1.

.file "exercise1.c"

.text

.section .rodata

.LC0:

.string "n = %d\n"

.text

.globl main

.type main, @function

main:

.LFB0:

.cfi_startproc

pushq %rbp

.cfi_def_cfa_offset 16

.cfi_offset 6, -16

movq %rsp, %rbp

.cfi_def_cfa_register 6

movl $42, %esi

leaq .LC0(%rip), %rax

movq %rax, %rdi

movl $0, %eax

call printf@PLT

movl $0, %eax

popq %rbp

.cfi_def_cfa 7, 8

ret

.cfi_endproc

.LFE0:

.size main, .-main

.ident "GCC: (GNU) 14.2.1 20240910"

.section .note.GNU-stack,"",@progbits

2.

.text

.globl main # make main visible for ld

main:

pushq %rbp # Save base pointer

movq %rsp, %rbp # Set up stack frame

# 4 + 6

movq $4, %rax # Load first operand into RAX

addq $6, %rax # 4 + 6

movq $msg, %rdi # First argument: format string

movq %rax, %rsi # Second argument: result

xorq %rax, %rax # Clear RAX (no floating point args)

call printf # Call printf

# 21 * 2

movq $21, %rax # Load first operand into RAX

imulq $2, %rax # 21 * 2

movq $msg, %rdi # First argument: format string

movq %rax, %rsi # Second argument: result

xorq %rax, %rax # Clear RAX

call printf # Call printf

# 4 + 7 / 2

movq $7, %rax # Load numerator into RAX

xorq %rdx, %rdx # Clear RDX for division

movq $2, %rcx # Load denominator into RCX

idivq %rcx # RAX = RAX / RCX (7 / 2)

addq $4, %rax # Add the result to 4

movq $msg, %rdi # First argument: format string

movq %rax, %rsi # Second argument: result

xorq %rax, %rax # Clear RAX

call printf # Call printf

# 3 - 6 * (10 / 5)

movq $10, %rax # Load numerator into RAX

movq $5, %rcx # Load denominator into RCX

idivq %rcx # RAX = RAX / RCX (10 / 5)

imulq $-6, %rax # RAX = RAX * (-6)

addq $3, %rax # Add 3 to the result

movq $msg, %rdi # First argument: format string

movq %rax, %rsi # Second argument: result

xorq %rax, %rax # Clear RAX (no floating point args)

call printf # Call printf

# Exit

movq $0, %rax # Return code 0

popq %rbp # Restore base pointer

ret # Return from main

.data

msg:

.string "n = %d\n" # Format string for printf

3.

.text

.globl main # make main visible for ld

main:

pushq %rbp # Save base pointer

movq %rsp, %rbp # Set up stack frame

# Evaluate true && false

movq $1, %rax # Load true (1) into RAX

andq $0, %rax # Perform logical AND with false (0)

cmpq $0, %rax # Compare result with 0 (false)

movq $false_msg, %rdi # Assume false by default

movq $true_msg, %rsi # Assume true

cmovne %rdi, %rsi # If result is not 0, use true message

call printf # Call printf

# Evaluate 3 == 4 ? 10 * 2 : 14

movq $3, %rax # Load 3 into RAX

cmpq $4, %rax # Compare RAX with 4

jne not_equal # Jump if not equal (3 != 4)

movq $14, %rax # Load result for else case (14)

jmp print_result # Jump to print result

not_equal:

movq $10, %rax # Load first operand (10)

imulq $2, %rax # Multiply by 2 (10 * 2)

print_result:

movq $int_msg, %rdi # Load format string for integer output

movq %rax, %rsi # Second argument: result (product or 14)

call printf # Call printf

# Evaluate 2 == 3 || 4 <= (2 * 3)

movq $3, %rax # Load 3 into RAX

cmpq $2, %rax # Compare 2 with 3

jne check_second_condition # Jump if 2 != 3

movq $1, %rbx # If 2 == 3, set RBX to true (1)

jmp finish # Jump to finish

check_second_condition:

movq $2, %rcx # Load 2 into RCX

imulq $3, %rcx # Multiply 2 * 3 (result: 6)

movq $4, %rax # Load 4 into RAX

cmpq %rcx, %rax # Compare 4 with 6

jbe less_or_equal # Jump if 4 <= 6

movq $0, %rbx # If not, set RBX to false (0)

jmp finish # Jump to finish

less_or_equal:

movq $1, %rbx # If 4 <= 6, set RBX to true (1)

finish:

cmpq $0, %rbx # Compare RBX with 0 (false)

movq $false_msg, %rdi # Assume false by default

movq $true_msg, %rsi # Assume true

cmovne %rsi, %rdi # If RBX is not zero (true), use true message

call printf # Call printf

# Exit program properly

movq $0, %rax # Return code 0

popq %rbp # Restore base pointer

ret # Return from main

.data

false_msg:

.string "false\n" # String for false output

true_msg:

.string "true\n" # String for true output

int_msg:

.string "%d\n" # Format string for integer output

4.

.text

.globl main # Make main visible for the linker

main:

pushq %rbp # Save base pointer

movq %rsp, %rbp # Set up stack frame

# Load x from memory

movq x(%rip), %rax # Move the value of x (2) into RAX

# y = x * x

imulq %rax, %rax # Multiply x by itself (RAX = RAX * RAX, now RAX = 4)

# Store the result of y in memory

movq %rax, y(%rip) # Move the result (y = 4) into the y variable

# Load x and y from memory

movq x(%rip), %rax # Load x (2) back into RAX

addq y(%rip), %rax # Add y (4) to RAX (RAX = 4 + 2 = 6)

# Prepare for the printf call

movq $result_msg, %rdi # Load the format string into RDI

movq %rax, %rsi # Move the result (6) into RSI

xorq %rax, %rax # Clear RAX for calling printf

call printf # Call printf to print the result

# Exit the program

movq $60, %rax # syscall: exit

xorq %rdi, %rdi # status: 0

syscall # invoke syscall

popq %rbp # Restore base pointer

ret # Return from main

.data # Data section

x: .quad 2 # Allocate x in the data segment, initialized to 2

y: .quad 0 # Allocate y in the data segment, initialized to 0

result_msg: .string "%d\n" # Format string for printf

5

.data

result_msg: .string "%d\n" # Format string for printf

.text

.globl main # Make main visible for the linker

main:

pushq %rbp # Save base pointer

movq %rsp, %rbp # Set up stack frame

### First Instruction: print (let x = 3 in x * x)

# Allocate space for x on the stack

subq $8, %rsp # Reserve 8 bytes on the stack for x

movq $3, (%rsp) # Set x = 3 (store on stack)

# x * x

movq (%rsp), %rax # Load x into RAX

imulq %rax, %rax # Multiply x by itself (RAX = x * x)

# Prepare for the printf call (result is 9)

movq $result_msg, %rdi # Load the format string into RDI

movq %rax, %rsi # Move the result (9) into RSI

xorq %rax, %rax # Clear RAX for calling printf

call printf # Call printf to print the result

# Deallocate space for x

addq $8, %rsp # Free the space allocated for x on the stack

### Second Instruction: print (let x = 3 in ...)

# Allocate space for x on the stack

subq $8, %rsp # Reserve 8 bytes on the stack for x

movq $3, (%rsp) # Set x = 3 (store on stack)

# Inner block: let y = x + x in x * y

subq $8, %rsp # Reserve 8 bytes on the stack for y

movq 8(%rsp), %rax # Load x into RAX (from previous stack slot)

addq %rax, %rax # y = x + x (RAX = x + x)

movq %rax, (%rsp) # Store y on the stack 3

movq 8(%rsp), %rax # Load x into RAX again (from previous stack slot)

imulq (%rsp), %rax # Multiply x * y (RAX = x * y)

movq %rax, (%rsp) # Store y on the stack 18

# Inner block: let z = x + 3 in z / z

subq $8, %rsp # Reserve 8 bytes on the stack for z

movq 16(%rsp), %rcx # Load x into RCX (from previous stack slot)

addq $3, %rcx # z = x + 3 (RCX = x + 3)

movq %rcx, (%rsp) # Store z on the stack

xorq %rdx, %rdx # Clear RDX (for division)

movq (%rsp), %rax # Load z into RAX (z = x + 3)

idivq %rax # z / z (RAX = z / z, which is 1)

# Compute (x * y) + (z / z)

addq %rax, 8(%rsp) # Add z / z (which is 1) to x * y (from earlier)

# Prepare for the printf call (result is 19)

movq $result_msg, %rdi # Load the format string into RDI

movq 8(%rsp), %rsi # Load the result (19) into RSI

xorq %rax, %rax # Clear RAX for calling printf

call printf # Call printf to print the result

# Deallocate space for z, y, and x

addq $24, %rsp # Free the space allocated for z, y, and x

### Exit the program

movq $60, %rax # syscall: exit

xorq %rdi, %rdi # status: 0

syscall # invoke syscall

popq %rbp # Restore base pointer

ret # Return from main

6

#include <stdio.h>

int isqrt(int n)

{

int c = 0, s = 1;

// x^2 = (x-1)^2 + 2(x-1) + 1

do

{

// Increment c and calculate s in one line

s += (++c << 1) + 1;

} while (s <= n); // Single branching instruction here

return c;

}

int main()

{

int n;

for (n = 0; n <= 20; n++)

printf("sqrt(%2d) = %2d\n", n, isqrt(n));

return 0;

}

hw2

open Ast

open Format

(* Exception raised to signal a runtime error *)

exception Error of string

let error s = raise (Error s)

(* Values of Mini-Python.

Two main differences wrt Python:

- We use here machine integers (OCaml type `int`) while Python

integers are arbitrary-precision integers (we could use an OCaml

library for big integers, such as zarith, but we opt for simplicity

here).

- What Python calls a ``list'' is a resizeable array. In Mini-Python,

there is no way to modify the length, so a mere OCaml array can be used.

*)

type value =

| Vnone

| Vbool of bool

| Vint of int

| Vstring of string

| Vlist of value array

(* Print a value on standard output *)

let rec print_value = function

| Vnone -> printf "None"

| Vbool true -> printf "True"

| Vbool false -> printf "False"

| Vint n -> printf "%d" n

| Vstring s -> printf "%s" s

| Vlist a ->

let n = Array.length a in

printf "[";

for i = 0 to n-1 do print_value a.(i); if i < n-1 then printf ", " done;

printf "]"

(* Boolean interpretation of a value

In Python, any value can be used as a Boolean: None, the integer 0,

the empty string, and the empty list are all considered to be

False, and any other value to be True.

*)

let is_false v = match v with

| Vnone

| Vbool false

| Vstring ""

| Vlist [||] -> true

| Vint n -> n = 0

| _ -> false

let is_true v = not (is_false v)

(* We only have global functions in Mini-Python *)

let functions = (Hashtbl.create 16 : (string, ident list * stmt) Hashtbl.t)

(* The following exception is used to interpret `return` *)

exception Return of value

(* Local variables (function parameters and local variables introduced

by assignments) are stored in a hash table that is passed to the

following OCaml functions as parameter `ctx`. *)

type ctx = (string, value) Hashtbl.t

(* Interpreting an expression (returns a value) *)

let compare op n1 n2 = match n1 , n2 with

| int ,_->

match op with

| Beq -> n1 = n2

| Bneq -> n1 <> n2

| Blt -> n1 < n2

| Ble -> n1 <= n2

| Bgt -> n1 > n2

| Bge -> n1 >= n2

| _ -> raise (Error "unsupported operand types")

let rec expr ctx = function

| Ecst Cnone ->

Vnone

| Ecst (Cbool b) ->

Vbool(b)

| Ecst (Cstring s) ->

Vstring s

| Ecst (Cint n) ->

Vint (Int64.to_int n)

(* arithmetic *)

| Ebinop (Badd | Bsub | Bmul | Bdiv | Bmod |

Beq | Bneq | Blt | Ble | Bgt | Bge as op, e1, e2) ->

let v1 = expr ctx e1 in

let v2 = expr ctx e2 in

begin match op, v1, v2 with

(* int *)

| Badd, Vint n1, Vint n2 -> Vint (n1 + n2)

| Bsub, Vint n1, Vint n2 -> Vint (n1 - n2)

| Bmul, Vint n1, Vint n2 -> Vint (n1 * n2)

| Bdiv, Vint n1, Vint n2 -> Vint (n1 / n2)

| Bmod, Vint n1, Vint n2 -> Vint (n1 mod n2)

(* string *)

| Badd, Vstring n1, Vstring n2 -> Vstring (String.cat n1 n2)

(* bool *)

| Beq, _, _ -> Vbool (compare Beq v1 v2)

| Bneq, _, _ -> Vbool (compare Bneq v1 v2)

| Blt, _, _ -> Vbool (compare Blt v1 v2)

| Ble, _, _ -> Vbool (compare Ble v1 v2)

| Bgt, _, _ -> Vbool (compare Bgt v1 v2)

| Bge, _, _ -> Vbool (compare Bge v1 v2)

(*

| Badd, Vlist l1, Vlist l2 ->

assert false (* TODO (question 5) *)

*)

| _ -> error "unsupported operand types"

end

| Eunop (Uneg, e1) ->

Vint ( match expr ctx e1 with

| Vint v -> - v

| _ -> error "unsupported operand type")

(* Boolean *)

| Ebinop (Band, e1, e2) ->

let v1 = expr ctx e1 in

if is_true v1

then expr ctx e2

else v1

| Ebinop (Bor, e1, e2) ->

let v1 = expr ctx e1 in

if is_true v1

then v1

else

expr ctx e2

| Eunop (Unot, e1) ->

Vbool ( match expr ctx e1 with

| Vbool b -> not b

| _ -> error "unsupported operand type in 'not'")

| Eident {id} ->

Hashtbl.find ctx id

(* function call *)

| Ecall ({id="len"}, [e1]) ->

begin match expr ctx e1 with

| Vstring s -> Vint (String.length s)

| Vlist l -> Vint (Array.length l)

| _ -> error "this value has no 'len'" end

| Ecall ({id="list"}, [Ecall ({id="range"}, [e1])]) ->

let n = expr ctx e1 in

Vlist (match n with

| Vint n -> Array.init n (fun i -> Vint i)

| _ -> error "unsupported operand type in 'list'")

| Ecall ({id=f}, el) ->

if not (Hashtbl.mem functions f) then error ("unbound function " ^ f);

let args, body = Hashtbl.find functions f in

if List.length args <> List.length el then error "bad arity";

let ctx' = Hashtbl.create 16 in

List.iter2 (fun {id=x} e -> Hashtbl.add ctx' x (expr ctx e)) args el;

begin try stmt ctx' body; Vnone with Return v -> v end

| Elist el ->

Vlist (Array.of_list (List.map (expr ctx) el))

| Eget (e1, e2) ->

match expr ctx e2 with

| Vint i ->

begin match expr ctx e1 with

| Vlist l ->

if i < 0 || i >= Array.length l then error "index out of bounds"

else l.(i)

| _ -> error "list expected" end

| _ -> error "integer expected"

(* Interpreting a statement

returns nothing but may raise exception `Return` *)

and expr_int ctx e = match expr ctx e with

| Vbool false -> 0

| Vbool true -> 1

| Vint n -> n

| _ -> error "integer expected"

and stmt ctx = function

| Seval e ->

ignore (expr ctx e)

| Sprint e ->

print_value (expr ctx e); printf "@."

| Sblock bl ->

block ctx bl

| Sif (e, s1, s2) ->

if is_true(expr ctx e) then

stmt ctx s1

else

stmt ctx s2

| Sassign ({id}, e1) ->

Hashtbl.replace ctx id (expr ctx e1)

| Sreturn e ->

raise (Return (expr ctx e))

| Sfor ({id=x}, e, s) ->

begin match expr ctx e with

| Vlist l ->

Array.iter (fun v -> Hashtbl.replace ctx x v; stmt ctx s) l

| _ -> error "list expected" end

| Sset (e1, e2, e3) ->

match expr ctx e1 with

| Vlist l ->

let index= expr_int ctx e2 in

l.(index)<- expr ctx e3

| _ -> error "list expected"

(* Interpreting a block (a sequence of statements) *)

and block ctx = function

| [] -> ()

| s :: sl -> stmt ctx s; block ctx sl

(* Interpreting a file

- `dl` is a list of function definitions (see type `def` in ast.ml)

- `s` is a statement (the toplevel code)

*)

let file (dl, s) =

List.iter

(fun (f,args,body) -> Hashtbl.add functions f.id (args, body)) dl;

stmt (Hashtbl.create 16) s

hw3

run.sh

ocaml HW3.ml

dot -Tpdf autom2.dot > autom.pdf && firefox autom.pdf

ocamlopt a.ml lexer.ml && ./a.out

hw3

type ichar = char * int

type regexp =

| Epsilon

| Character of ichar

| Union of regexp * regexp

| Concat of regexp * regexp

| Star of regexp

(*

Exercise 1: Nullity of a regular expression

val null : regexp -> bool

*)

let rec null a =

match a with

| Epsilon ->

true

| Character (c,v) ->

false

| Union (r1,r2) ->

null(r1) || null(r2)

| Concat (r1,r2) ->

null(r1) && null(r2)

| Star r1 ->

true

let () =

let a = Character ('a', 0) in

assert (not (null a));

assert (null (Star a));

assert (null (Concat (Epsilon, Star Epsilon)));

assert (null (Union (Epsilon, a)));

assert (not (null (Concat (a, Star a))))

let () = print_endline "🎉✅ Exercise 1: tests passed successfully!"

(*

Exercise 2: The first and the last

*)

module Cset = Set.Make(struct type t = ichar let compare = Stdlib.compare end)

(*

val first : regexp -> Cset.t

*)

let rec first r =

match r with

| Epsilon -> Cset.empty (* Epsilon has no characters *)

| Character c -> Cset.singleton c (* The character itself is the first *)

| Union (r1, r2) -> Cset.union (first r1) (first r2)

| Concat (r1, r2) -> if null r1 then Cset.union (first r1) (first r2)

else first r1

| Star r1 -> first r1 (* Star can repeat, but first is just the first of the repeated expression *)

(*

val last : regexp -> Cset.t

*)

let rec last r =

match r with

| Epsilon -> Cset.empty (* Epsilon has no characters *)

| Character c -> Cset.singleton c (* The character itself is the first *)

| Union (r1, r2) -> Cset.union (last r1) (last r2)

| Concat (r1, r2) -> if null r2 then Cset.union (last r1) (last r2)

else last r2

| Star r1 -> last r1 (* Star can repeat, but first is just the first of the repeated expression *)

let () =

let ca = ('a', 0) and cb = ('b', 0) in

let a = Character ca and b = Character cb in

let ab = Concat (a, b) in

let eq = Cset.equal in

assert (eq (first a) (Cset.singleton ca));

assert (eq (first ab) (Cset.singleton ca));

assert (eq (first (Star ab)) (Cset.singleton ca));

assert (eq (last b) (Cset.singleton cb));

assert (eq (last ab) (Cset.singleton cb));

assert (Cset.cardinal (first (Union (a, b))) = 2);

assert (Cset.cardinal (first (Concat (Star a, b))) = 2);

assert (Cset.cardinal (last (Concat (a, Star b))) = 2)

let () = print_endline "🎉✅ Exercise 2: tests passed successfully!"

(*

Exercise 3: The follow

*)

let print x =

match x with

| (c,v) -> print_endline (Char.escaped c ^ " " ^ string_of_int v)

let print_set pre x =

(* Cset.iter (fun (c,v) -> print_string (Char.escaped c ^ " " ^ string_of_int v ^ "\n")) x *)

print_endline pre;

Cset.iter (fun (c,v) -> print_string ( pre ^ " " ^ Char.escaped c ^ " " ^ string_of_int v ^ "\n")) x

(* let ()=print_string "\n" *)

(* val follow : ichar -> regexp -> Cset.t *)

let rec follow ic r =

match r with

| Epsilon ->

Cset.empty (* Epsilon has no characters *)

| Character (c,v) ->

Cset.empty

| Union (r1, r2) ->

Cset.union (follow ic r1) (follow ic r2)

| Concat (r1, r2) ->

let x= last(r1) in

if Cset.mem ic x then

Cset.union (Cset.union (follow ic r1 ) (follow ic r2 )) (first r2 )

else

Cset.union (follow ic r1 ) (follow ic r2 )

| Star r1 ->

let x= last(r1) in

if Cset.mem ic x then

Cset.union (follow ic r1 ) (first r1 )

else

follow ic r1

let () =

let ca = ('a', 0) and cb = ('b', 0) in

let a = Character ca and b = Character cb in

let ab = Concat (a, b) in

assert (Cset.equal (follow ca ab) (Cset.singleton cb));

assert (Cset.is_empty (follow cb ab));

let r = Star (Union (a, b)) in

assert (Cset.cardinal (follow ca r) = 2);

assert (Cset.cardinal (follow cb r) = 2);

let r2 = Star (Concat (a, Star b)) in

assert (Cset.cardinal (follow cb r2) = 2);

let r3 = Concat (Star a, b) in

assert (Cset.cardinal (follow ca r3) = 2)

let () = print_endline "🎉✅ Exercise 3: tests passed successfully!"

(* Exercise 4: Construction of the automaton *)

type state = Cset.t (* a state is a set of characters *)

module Cmap = Map.Make(Char) (* dictionary whose keys are characters *)

module Smap = Map.Make(Cset) (* dictionary whose keys are states *)

type autom = {

start : state;

trans : state Cmap.t Smap.t (* state dictionary -> (character dictionary ->state) *)

}

(* cmap[c]=state *)

(* smap[state]=cmap *)

let map1= Cmap.add 'a' Cset.empty Cmap.empty

let map2= Smap.add Cset.empty map1 Smap.empty

(* val next_state : regexp -> Cset.t -> char -> Cset.t *)

(* val next_state : regexp -> state -> char -> state *)

let rec next_state r s c =

(* Combine the first sets of states reachable from s via character c

according to the transitions defined by r. *)

let reachable_states =

Cset.fold (fun (ch, v) acc ->

(* print_endline (Char.escaped ch ^ " " ^ string_of_int v ^ " " ^ Char.escaped c); *)

if ch = c then

(* let ()= print_set "follow" (follow (ch, v) r) in *)

(* let f=(follow (ch, v) r) in *)

Cset.union acc (follow (ch, v) r)

else

acc

) s Cset.empty

in

reachable_states

let eof = ('#', -1)

(* val make_dfa : regexp -> autom *)

let make_dfa (r:regexp) =

let r = Concat (r, Character eof) in

(* let k = Epsilon in *)

(* let r = Concat (r,k ) in *)

let trans = ref Smap.empty in

let rec transitions q =

let find_all_next_state =

Cset.fold (fun (c, v) map ->

let q' = next_state r q c in

Cmap.add c q' map

) q Cmap.empty

in

trans := Smap.add q find_all_next_state !trans;

(* Process the newly added states in transitions map *)

Cmap.iter (fun c q' ->

(* the state that not find yet *)

if not (Smap.mem q' !trans) then

transitions q'

) find_all_next_state;

in

let q0 = first r in

transitions q0;

{ start = q0; trans = !trans }

let fprint_state fmt q =

Cset.iter (fun (c,i) ->

if c = '#' then Format.fprintf fmt "# " else Format.fprintf fmt "%c%i " c i) q

let fprint_transition fmt q c q' =

Format.fprintf fmt "\"%a\" -> \"%a\" [label=\"%c\"];@\n"

fprint_state q

fprint_state q'

c

let fprint_autom fmt a =

Format.fprintf fmt "digraph A {@\n";

Format.fprintf fmt " @[\"%a\" [ shape = \"rect\"];@\n" fprint_state a.start;

Smap.iter

(fun q t -> Cmap.iter (fun c q' -> fprint_transition fmt q c q') t)

a.trans;

Format.fprintf fmt "@]@\n}@."

let save_autom file a =

let ch = open_out file in

Format.fprintf (Format.formatter_of_out_channel ch) "%a" fprint_autom a;

close_out ch

let () = print_endline "🎉✅ Exercise 4: tests passed successfully!"

(* Exercise 5: Word recognition *)

(* val recognize : autom -> string -> bool *)

let recognize (a:autom) (s:string) =

(* let str_with_eof = s ^ "#" in *)

let str_with_eof = s in

let final_state,accpet = String.fold_left (fun (q,accpet) c ->

if Cset.is_empty q then

(* accpet or out of trans *)

(q,accpet)

else

let cmap = Smap.find q a.trans in

if Cmap.mem c cmap then

let q' = Cmap.find c cmap in

(* let () = print_endline (Char.escaped c) in

let () = print_set "final" q' in

let _ =print_endline (string_of_bool( Cset.is_empty q' ))in *)

(q', Cset.mem eof q')

else

(*out of trans *)

(Cset.empty,false)

) (a.start,Cset.mem eof a.start) str_with_eof in

accpet

(* (a|b)*a(a|b) *)

let r = Concat (Star (Union (Character ('a', 1), Character ('b', 1))),

Concat (Character ('a', 2),

Union (Character ('a', 3), Character ('b', 2))))

let a = make_dfa r

let () = save_autom "autom.dot" a

(* positive test *)

let () = assert (recognize a "aa")

let () = assert (recognize a "ab")

let () = assert (recognize a "abababaab")

let () = assert (recognize a "babababab")

let () = assert (recognize a (String.make 1000 'b' ^ "ab"))

(* neg test *)

let () = assert (not (recognize a ""))

let () = assert (not (recognize a "a"))

let () = assert (not (recognize a "b"))

let () = assert (not (recognize a "ba"))

let () = assert (not (recognize a "aba"))

let () = assert (not (recognize a "abababaaba"))

let r = Star (Union (Star (Character ('a', 1)),

Concat (Character ('b', 1),

Concat (Star (Character ('a',2)),

Character ('b', 2)))))

let a = make_dfa r

let () = save_autom "autom2.dot" a

let () = assert (recognize a "")

let () = assert (recognize a "bb")

let () = assert (recognize a "aaa")

let () = assert (recognize a "aaabbaaababaaa")

let () = assert (recognize a "bbbbbbbbbbbbbb")

let () = assert (recognize a "bbbbabbbbabbbabbb")

let () = assert (not (recognize a "b"))

let () = assert (not (recognize a "ba"))

let () = assert (not (recognize a "ab"))

let () = assert (not (recognize a "aaabbaaaaabaaa"))

let () = assert (not (recognize a "bbbbbbbbbbbbb"))

let () = assert (not (recognize a "bbbbabbbbabbbabbbb"))

let () = print_endline "🎉✅ Exercise 5: tests passed successfully!"

(* Exercise 6: Generating a lexical analyzer *)

type buffer = { text: string; mutable current: int; mutable last: int }

let next_char b =

if b.current = String.length b.text then raise End_of_file;

let c = b.text.[b.current] in

b.current <- b.current + 1;

c

type gen_state={

id: int ;

trans:state Cmap.t Smap.t;

}

(* val generate: string -> autom -> unit *)

let generate (filename: string) (a: autom) =

let channel = open_out filename in

try

let state_id = ref Smap.empty in

let count = ref 0 in

(* Write formatted content to the file *)

Printf.fprintf channel "%s" "type buffer = { text: string; mutable current: int; mutable last: int }\n";

Printf.fprintf channel "%s" "let next_char b =

if b.current = String.length b.text then raise End_of_file;

let c = b.text.[b.current] in

b.current <- b.current + 1;

c\n";

let update_state_id (q : state) =

if not (Smap.mem q !state_id) then (

state_id := Smap.add q !count !state_id;

count := !count + 1;

);

Smap.find q !state_id

in

let gen_state = Smap.fold (fun k v s ->

let i=update_state_id k in

(* first state *)

let prefix_string = Format.asprintf (if i = 0 then "let rec state%i b =\n" else "and state%i b =\n") i in

(* accept state *)

let accept = Cset.mem eof k in

(* let prefix_string =if accept then prefix_string ^ " print_endline ((string_of_int b.last)^(string_of_int b.current));\n" else prefix_string in *)

if accept then

let prefix_string =if accept then prefix_string ^ " b.last <- b.current;\n" else prefix_string in

let s'=s^(prefix_string)^"\n"in

s'

else

let prefix_string = prefix_string ^ " let c = next_char b in\n" in

let prefix_string = prefix_string ^ " match c with \n" in

let match_string = Cmap.fold (fun c q s' ->

let q_id = update_state_id q in

let s'' = Format.asprintf " | '%c' -> state%i b\n" c q_id in

s' ^ s''

) v "" in

let match_string = match_string ^ " | _ -> raise (Failure \"undefine key in state\")\n" in

let state_string=prefix_string ^ match_string in

let s'=s^(state_string)^"\n"in

s'

) a.trans "" in

let start_string = Format.asprintf "let start = state%i\n" (Smap.find a.start !state_id)in

let gen_state = gen_state ^ start_string in

Printf.fprintf channel "%s" gen_state;

close_out channel

with e ->

(* Ensure the file is closed if an error occurs *)

close_out_noerr channel;

raise e

(* a*b *)

let r3 = Concat (

Star (Character ('a', 1)),

Character ('b', 1)

)

let a = make_dfa r3

let () = save_autom "autom2.dot" a

let () = generate "a.ml" a

let () = print_endline "🎉✅ Exercise 6: tests passed successfully!"

lexer.ml

(* type buffer = { text: string; mutable current: int; mutable last: int } *)

type buffer = A.buffer

let () =

let str = "abbaaab" in

(* let (b: ref A.buffer) = ref { text = str; current = 0; last = -1 } in *)

let (b: A.buffer) = { text = str; current = 0; last = -1 } in

let flag = ref true in

let start = A.start in

while !flag do

try

let last = if b.last = -1 then 0 else b.last in

let () = start b in

(* print_endline ( "last: "^(string_of_int b.last) ^" current: "^(string_of_int b.current)); *)

if b.last != -1 then

(* print_endline ( (string_of_int last) ^" "^(string_of_int b.last)); *)

let ac_string=String.sub b.text (last) (b.last-last) in

print_endline ("---> "^ac_string)

;

with e ->

print_endline (Printexc.to_string e);

match Printexc.to_string e with

| "Failure \"undefine key in state\"" -> flag := false

| "End_of_file" -> flag := false

| _ -> ()

done

computer-graphics

Deferred Rendering

old way

The old render pipeline used to directly transport the rendered pixel results to the screen, without providing a way to save the rendered image.

Before using Deferred Rendering, we used to calculate the mesh information required for lighting in one shader. This method is efficient when there is a constant number of lights in the scene, as we only need to run one shader for each model to properly apply lighting.

But we have 2 defect

- The number of lights in the shader needs to be constant because the shader has to be compiled at runtime for each frame.

- The same information used to calculate lighting for a mesh (such as mesh normal and mesh color) cannot be shared with another shader, such as a post-processing shader. Therefore, the same information needs to be recalculated in each shader that uses it.

framebuffer

The frame buffer is a hardware implementation in the GPU that can be used as a temporary buffer to store the rendered results. It can also save the results back to the texture memory in the GPU.

With the frame buffer, we can render the information that we will use multiple times and reuse it from the texture memory, rather than recalculating it each time.

deferred lighting

shadow

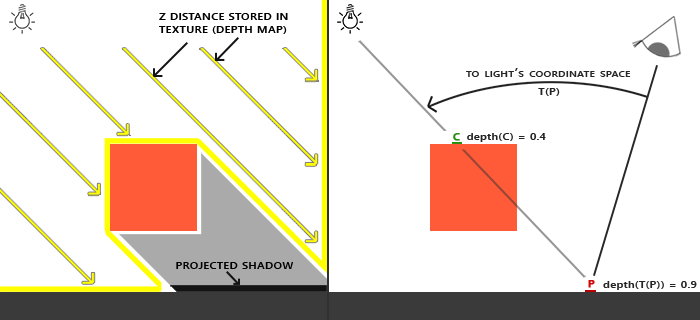

A shadow is created when one mesh occludes another in the path of a light source. The way to achieve this is to render a depth texture and then render the closest mesh in the path of the light source. This is done repeatedly for each light source, and the resulting images are saved as textures in a framebuffer.

By comparing the depth of a mesh to the closest depth to the light source, we can determine whether the mesh is the closest or not.

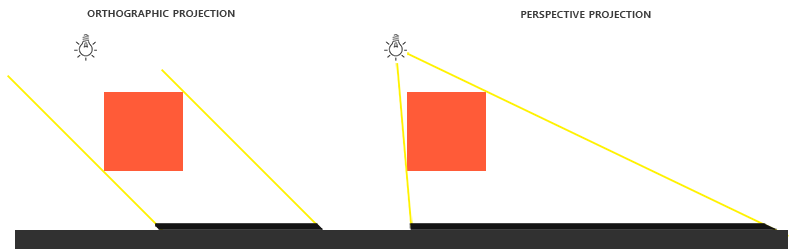

shadow projection

- orthographic: use on direction light like sun.

- prespective: use on point light like flash.

lighting compose

The deferred lighting is based on the G-buffer (which stores information like position and normal on a texture), and calculates the lighting in screen space. Each light is rendered as a separate layer. However, there is a problem with adding lighting layer by layer in the same texture, as it can cause race conditions due to simultaneous reading and writing.

Interestingly, when the VRAM in GPU is fast enough, which means that reading is faster than writing, the race condition may not occur. This is why we were initially confused about this problem, as it did not show up on my computer but did on another computer.

| Lighting Type | Image |

|---|---|

| Point Light |  |

| Point and Direction Light |  |

| Point, Direction, and Ambient Light |  |

postprocess

the postprocess effect on render often relia on the mesh information for example like outliner need a mesh id mask for edge delection.

distance fog from minecraft

our scene

our scene

use distance to blend with fog color because use the deferred render it is easy to acess depth and position from scene

deferred rendering

:::spoiler images each G-Buffer

| Render Type | Image |

|---|---|

| Albedo |  |

| Normal |  |

| Arm |  |

| Depth |  |

| ID |  |

| Lighting |  |

:::

computer networking

delay

circuit switching(FDM,TDM)

Frequency Division Multiplexing (FDM)

optical, electromagnetic frequencies divided into (narrow) frequency bands = each call allocated its own band, can transmit at max rate of that narrow band

Time Division Multiplexing (TDM)

time divided into slots = each call allocated periodic slot(s), can transmit at maximum rate of (wider) frequency band, but only during its time slot(s)

applicaion layer

http

http/1.0

- TCP connection opened

- at most one object sent over TCP connection

- Non-persistent HTTP。

http/1.1

- pipelined GETs over single TCP connection

- persistent HTTP。

http/2

- multiple, pipelined GETs over single TCP connection

- push unrequested objects to clien

http/3

This is an advanced transport protocol that operates on top of UDP. QUIC incorporates features like reduced connection and transport latency, multiplexing without head-of-line blocking, and improved congestion control. It integrates encryption by default, providing a secure connection setup with a single round-trip time (RTT).

persistent http

- keep connetion of TCP

- half time of tramit

email SMTP POP3 IMAP

SMTP

mail server send to mail server

POP3

muil-user download email

IMAP

will delete email that user download

| http | smtp | |

|---|---|---|

| pull | push | |

| encode | ASCII | ASCII |

| multiple obj | encapsulated in its own response message | multiple objects sent in multipart message |

DNS

- hostname to IP address translation

- host aliasing

- mail server aliasing

- take but 2 RTT(one for make connection one for return IP info) to get send IP back

socket

use this four tuple to identified TCP packet

(source IP, source port, destination IP, destination port)

UDP

- no handshaking before sending data

- sender explicitly attaches IP destination address and port # to each packet

- receiver extracts sender IP address and port# from received packe

- transmitted data may be lost or received out-of-order

TCP

- reliable, byte stream-oriented

- server process must first be running

- server must have created socket (door) that welcomes client’s contact

- TCP 3-way handshake

Multimedia video:

- DASH: Dynamic, Adaptive Streaming over HTT

- CBR: (constant bit rate): video encoding rate fixed

- VBR: (variable bit rate): video encoding rate changes as amount of spatial, temporal coding changes

principles of reliable data transfer

- finite state machines (FSM)

- Sequence numbers:

- byte stream “number” of first byte in segment’s data

- Acknowledgements:

- seq # of next byte expectet from other side

- cumulative ACK

Go Back N

the all the packet that behind is overtime or NAK

Selective repeat

only resend the packet overtime or NAK

stop and wait

rdt(reliable data transfer)

rdt 1.0

- rely on channel perfectly reliable

rdt 2.0

- checksum to detect bit errors

- acknowledgements (ACKs): receiver explicitly tells sender that pkt received OK

- negative acknowledgements (NAKs): receiver explicitly tells sender that pkt had errors

- stop and wait: sender sends one packet, then waits for receiver response

rdt 2.1

在每份封包加入序號(sequence number) 來編號,因此接收端可以藉由序號判斷現在收到的封包是不是重複的,若重複則在回傳一次ACK回去,而這裡的序號用1位元就夠了(0和1交替)。

-

sender

-

receiver

rdt 2.2

rdt2.2不再使用NAK,而是在ACK加入序號的訊息,所以在接收端的make_pkt()加入參數ACK,0或ACK,1,而在傳送端則是要檢查所收到ACK的序號。

rdt 3.0

- add timer .If sender not receive ACK then resend

TCP flow control

receiver controls sender, so sender won’t overflow receiver’s buffer by transmitting too much, too fast

window buffer size

if the receiver window buffer is fill then sender should stop sending until receiver window buffer is free again

SampleRTT

- measured time from segment transmission until ACK receipt

- ignore retransmissions

- SampleRTT will vary, want estimated RTT “smoother” average several recent measurements, not just current SampleRTT

TCP RTT(round trip time), timeout

- use EWMA(exponential weighted moving average)

- influence of past sample decreases exponentially fast

- typical value: = 0.125

TCP congestion control AIMD(Additive Increase Multiplicative Decrease)

- send cwnd bytes,wait RTT for ACKS, then send more bytes

slow start

increase rate exponentially until first loss event

- initially cwnd = 1 MSS

- double cwnd every RTT

- done by incrementing cwnd for every ACK received

CUBIC (briefly)

use the cubic function(三次函數) to predict bottlenck

3 duplicate ack

if recevie 3 ACK of same packet then see as reach bottlenck

congestion avoidance AIMD(Additive Increase Multiplicative Decrease)

Network Layer:

- functions

- network-layer functions:

- forwarding: move packets from a router’s input link to appropriate router output link

- routing: determine route taken by packets from source to destination

- routing algorithms

- analogy: taking a trip

- forwarding: process of getting through single interchange

- routing: process of planning trip from source to destination

- network-layer functions:

- data plane and control plane

- Data plane:

- local, per-router function

- determines how datagram arriving on router input port is forwarded to router output port

- forwarding: move packets from router’s input to appropriate router output

- Control plane:

- network-wide logic

- routing: determine route taken by packets from source to destination

- determines how datagram is routed among routers along end- end path from source host to destination host

- two control-plane approaches:

- traditional routing algorithms: implemented in routers

- software-defined networking Software-Defined Networking((SDN): implemented in (remote) servers

- Data plane:

Network layer:Data Plane(CH4)

Router

- input port queuing: if datagrams arrive faster than forwarding rate into switch fabri

- destination-based forwarding: forward based only on destination IP address (traditional)

- generalized forwarding: forward based on any set of header field values

Input port functions

Destination-based forwarding(Longest prefix matching)

- when looking for forwarding table entry for given destination address, use longest address prefix that matches destination address.

Switching fabrics

- transfer packet

- from input link to appropriate output link

- switching rate:

- rate at which packets can be transfer from

Input Output queuing

- input

- If switch fabric slower than input ports combined -> queueing may occur at input queues

- queueing delay and loss due to input buffer overflow!

- Head-of-the-Line (HOL) blocking: queued datagram at front of queue prevents others in queue from moving forward

- If switch fabric slower than input ports combined -> queueing may occur at input queues

- output

- Buffering

- drop policy: which datagrams to drop if no free buffers?

- Datagrams can be lost due to congestion, lack of buffers

- Priority scheduling – who gets best performance, network neutrality

- Buffering

buffer management

- drop: which packet to add,drop when buffers are full

- tail drop: drop arriving packet

- priority: drop/remove on priority basis

- marking: which packet to mark to signal congestion(ECN,RED)

packet scheduling

-

FCFS(first come, first served)

-

priority

- priority queue

-

RR(round robin)

- arriving traffic classified, queued by class(any header fields can be used for classification)

- server cyclically, repeatedly scans class queues, sending one complete packet from each class (if available) in turn

-

WFQ(Weighted Fair Queuing)

- round robin but each class, , has weight, , and gets weighted amount of service in each cycle

IP (Internet Protoco)

IP Datagram

IP address

- IP address: 32-bit identifier associated with each host or router interface

- interface: connection between host/router and physical link

- router’s typically have multiple interfaces

- host typically has one or two interfaces (e.g., wired Ethernet, wireless 802.11)

Subnets

- in same subnet if:

- device interfaces that can physically reach each other without passing through an intervening router

CIDR(Classless InterDomain Routing)

address format: a.b.c.d/x, where x is # bits in subnet portion of address

DHCP

host dynamically obtains IP address from network server when it “joins” network

- host broadcasts DHCP discover msg [optional]

- DHCP server responds with DHCP offer msg [optional]

- host requests IP address: DHCP request msg

- DHCP server sends address: DHCP ack msg

NAT(Network Address Translation)

- NAT has been controversial:

- routers “should” only process up to layer 3

- address “shortage” should be solved by IPv6

- violates end-to-end argument (port # manipulation by network-layer device)

- NAT traversal: what if client wants to connect to server behind NAT?

- but NAT is here to stay:

- extensively used in home and institutional nets, 4G/5G cellular net

IP6

tunneling:Transition from IPv4 to IPv6

IPv6 datagram carried as payload in IPv4 datagram among IPv4 routers (“packet within a packet”)

Generalized forwarding(flow table)

flow table entries

- match+action: abstraction unifies different kinds of devices

| Device | Match | Action |

|---|---|---|

| Router | Longest Destination IP Prefix | Forward out a link |

| Switch | Destination MAC Address | Forward or flood |

| Firewall | IP Addresses and Port Numbers | Permit or deny |

| NAT | IP Address and Port | Rewrite address and port |

middleboxes

Network layer:Control Plane(CH5)

- structuring network control plane:

- per-router control (traditional)

- logically centralized control (software defined networking)

Routing protocols

Dijkstra’s link-state routing algorithm

- centralized: network topology, link costs known to all node

- iterative: after k iterations, know least cost path to k destinations

Distance vector algorithm(Bellman-Ford)

- from time-to-time, each node sends its own distance vector estimate to neighbors

- under natural conditions, the estimate Dx(y) converge to the actual least cost dx(y)

- when x receives new DV estimate from any neighbor, it updates its own DV using B-F equation

- link cost changes:

- node detects local link cost change

- updates routing info, recalculates local DV

- if DV changes, notify neighbors

Intra-AS routing

- RIP: Routing Information Protocol [RFC 1723]

- classic DV: DVs exchanged every 30 secs

- no longer widely used

- EIGRP: Enhanced Interior Gateway Routing Protocol

- DV based

- formerly Cisco-proprietary for decades (became open in 2013 [RFC 7868])

- OSPF: Open Shortest Path First [RFC 2328]

- classic link-state

- each router floods OSPF link-state advertisements (directly over IP rather than using TCP/UDP) to all other routers in entire AS

- multiple link costs metrics possible: bandwidth, delay

- each router has full topology, uses Dijkstra’s algorithm to compute forwarding table

- security: all OSPF messages authenticated (to prevent malicious intrusion)

- classic link-state

Inter-AS routing

- BGP (Border Gateway Protocol)inter-domain routing protocol:

- eBGP: obtain subnet reachability information from neighboring ASes

- iBGP: propagate reachability information to all AS-internal routers

Why different Intra-AS, Inter-AS routing ?

| Criteria | Intra-AS | Inter-AS |

|---|---|---|

| Policy | Single admin, no policy decisions needed | Admin wants control over how traffic is routed, and who routes through its network |

| Scale | Hierarchical routing saves table size, reduced update traffic | |

| Performance | Can focus on performance | Policy dominates over performance |

ICMP: internet control message protocol

- used by hosts and routers to communicate network-level information

- error reporting: unreachable host, network, port, protocol

- echo request/reply (used by ping)

- network-layer “above: IP:

- ICMP messages carried in IP datagrams

- ICMP message: type, code plus first 8 bytes of IP datagram causing error

Link layer and LAN(CH6)

- flow control:

- pacing between adjacent sending and receiving nodes

- error detection:

- errors caused by signal attenuation, noise.

- receiver detects errors, signals retransmission, or drops frame

- error correction:

- receiver identifies and corrects bit error(s) without retransmission

- half-duplex and full-duplex:

- with half duplex, nodes at both ends of link can transmit, but not at sam

error detection, correction

- Internet checksum

- Parity checking

- Cyclic Redundancy Check (CRC)

Parity checking

Cyclic Redundancy Check (CRC)

MAC protocol(multiple access)

- channel partitioning

- time division

- frequency division

- random access MAC protocol

- ALOHA, slotted ALOHA

- CSMA

- CSMA/CD: ethernet

- CSMA/CA: 802.11

- takeing turns

- polling(token passing)

- bluetooth,token ring

slotted ALOHA

- time divided into equal size slots (time to transmit 1 frame)

- nodes are synchronized

- if collision: node retransmits frame in each subsequent slot with probability until success

- max efficiency = = 0.37

Pure ALOHA

CSMA

- CSMA:

- if channel sensed idle: transmit entire frame • if channel sensed busy: defer transmission

- CSMA/CD(Collision Detection):

- collision detection easy in wired, difficult with wireless

- if collisions detected within short then send

abort,jam signal - After aborting, NIC enters binary (exponential) backoff:

- after mth collision, NIC chooses K at random from {0,1,2, …, 2m-1}. NIC waits bit times

- more collisions: longer backoff interval

LAN

ARP: address resolution protocol

determine interface’s MAC address by its IP address

- ARP table: each IP node (host, router) on LAN has table

- IP/MAC address mappings for some LAN nodes: < IP address; MAC address; TTL>

- TTL (Time To Live): time after which address mapping will be forgotten (typically 20 min)

- destination MAC address =

FF-FF-FF-FF-FF-FF

switch

switch table

computer networking

delay

circuit switching(FDM,TDM)

Frequency Division Multiplexing (FDM)

optical, electromagnetic frequencies divided into (narrow) frequency bands = each call allocated its own band, can transmit at max rate of that narrow band

Time Division Multiplexing (TDM)

time divided into slots = each call allocated periodic slot(s), can transmit at maximum rate of (wider) frequency band, but only during its time slot(s)

applicaion layer

http

http/1.0

- TCP connection opened

- at most one object sent over TCP connection

- Non-persistent HTTP。

http/1.1

- pipelined GETs over single TCP connection

- persistent HTTP。

http/2

- multiple, pipelined GETs over single TCP connection

- push unrequested objects to clien

http/3

This is an advanced transport protocol that operates on top of UDP. QUIC incorporates features like reduced connection and transport latency, multiplexing without head-of-line blocking, and improved congestion control. It integrates encryption by default, providing a secure connection setup with a single round-trip time (RTT).

persistent http

- keep connetion of TCP

- half time of tramit

email SMTP POP3 IMAP

SMTP

mail server send to mail server

POP3

muil-user download email

IMAP

will delete email that user download

| http | smtp | |

|---|---|---|

| pull | push | |

| encode | ASCII | ASCII |

| multiple obj | encapsulated in its own response message | multiple objects sent in multipart message |

DNS

- hostname to IP address translation

- host aliasing

- mail server aliasing

- take but 2 RTT(one for make connection one for return IP info) to get send IP back

socket

use this four tuple to identified TCP packet

(source IP, source port, destination IP, destination port)

UDP

- no handshaking before sending data

- sender explicitly attaches IP destination address and port # to each packet

- receiver extracts sender IP address and port# from received packe

- transmitted data may be lost or received out-of-order

TCP

- reliable, byte stream-oriented

- server process must first be running

- server must have created socket (door) that welcomes client’s contact

- TCP 3-way handshake

Multimedia video:

- DASH: Dynamic, Adaptive Streaming over HTT

- CBR: (constant bit rate): video encoding rate fixed